Overlords at the Easel

Artificial intelligence was built on work stolen from artists. It’ll now create work to replace them.

The retail employee who directs you to the self-scanning devices that promise to put him out of work is engaged in economic suicide. But it’s not his choice. He is ordered to do so by his boss.

Human beings who voluntarily develop and perfect artificial intelligence elevate the perverse Todestrieb to a nearly incomprehensible scale. AI workers could do anything else. They could do nothing at all. They could sabotage the bots and self-driving cars and autonomous armed drones that will drive us to the unemployment line before blowing off our heads. I do not know what the fact that AI scientists roll up their sleeves and do their best to render us all redundant and obsolete demonstrates about our psychological wiring, but it cannot be good.

As a cartoonist of the early 21st century I am the last of the Mohicans, a direct heir of the first known artists: the Paleolithic people whose cave paintings of hunters were discovered by a French boy who tumbled through a hole in the ground in Nazi-occupied France. Drawing for a living under late capitalism is a challenge. Selling political drawings in an era when humor and satire have all but vanished from popular culture is even harder. (Charles Schulz, Rudy Ray Moore, Carol Burnett, Flip Wilson, Dave Barry, Art Buchwald, “Weird Al” Yankovic: None would find work if they were starting out today.) When I began drawing editorial cartoons for syndication three decades ago, there were hundreds of us. Today there are an even dozen. I am 59 years old and I am one of the younger ones.

The cruel gods of artificial intelligence have targeted me and my kind for termination. AI-based text-to-image generators are the latest technological leap that exploitative entrepreneurs are using to make a mockery of copyright and trademark, the fundamental legal protections of intellectual property in the United States. From a user standpoint, the interface is simple. You go to a website and enter some terms, say: “Abraham Lincoln painted by Picasso.” A few seconds later, if the data set is big enough and the algorithms smart enough, out pops a picture representing your request. It’s not exactly cool. But it’s interesting.

There’s nothing inherently wrong with the technology. Honest Abe couldn’t be more in the public domain. We all know what the Picasso style is, although whether we are talking Blue Period or high cubism is an open question. The question is, where does the material — the actual art — originate? What does the computer use to work from?

As long as all the material is sourced legally and ethically, it’s all good fun. As you can probably extrapolate from the fact that I’m writing about this and I’m a professional complainer, that’s far from the case.

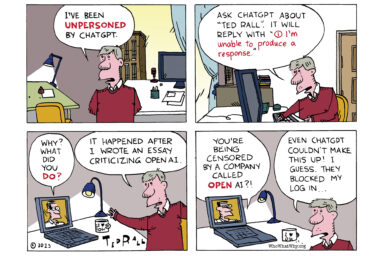

A fellow cartoonist, Sarah Andersen, opened my eyes to how this works. Andersen explained in a recent piece for The New York Times that her copyrighted webcomic (a genre of comic strip distributed exclusively online, typically with a millennial/Generation X cultural sensibility) has been repeatedly, illegally appropriated, without her permission, by “A.I. text-to-image generators such as Stable Diffusion, Midjourney and DALL-E.” Andersen complained that right-wingers appropriated her work, changing her word balloons to offensive, politically reprehensible statements to which she was completely opposed.

That’s awful. Clearly. I know what it feels like; the same thing has happened to me at the hands of some asshole with Photoshop. What raised my concern was Andersen’s observation that AI technology, now in its infancy, will likely improve to the point that it will become difficult to tell the difference between the original creation of a human artist and its machine-generated bastard offspring. If and when that happens, how will I be able to ask a publication to pay me for something they can get for free?

Adding outrage to fatal injury, AI-based text-to-image generators obtain the data upon which they base their digital creations in contravention of all civilized norms — oh hell, I’ll say it, by breaking (or, their corporate attorneys hope, circumventing) federal copyright law. Andersen writes, “The data set for Stable Diffusion is called LAION 5b and was built by collecting close to six billion images from the internet in a practice called data scraping. … So what makes up these data sets? Well, pretty much everything.”

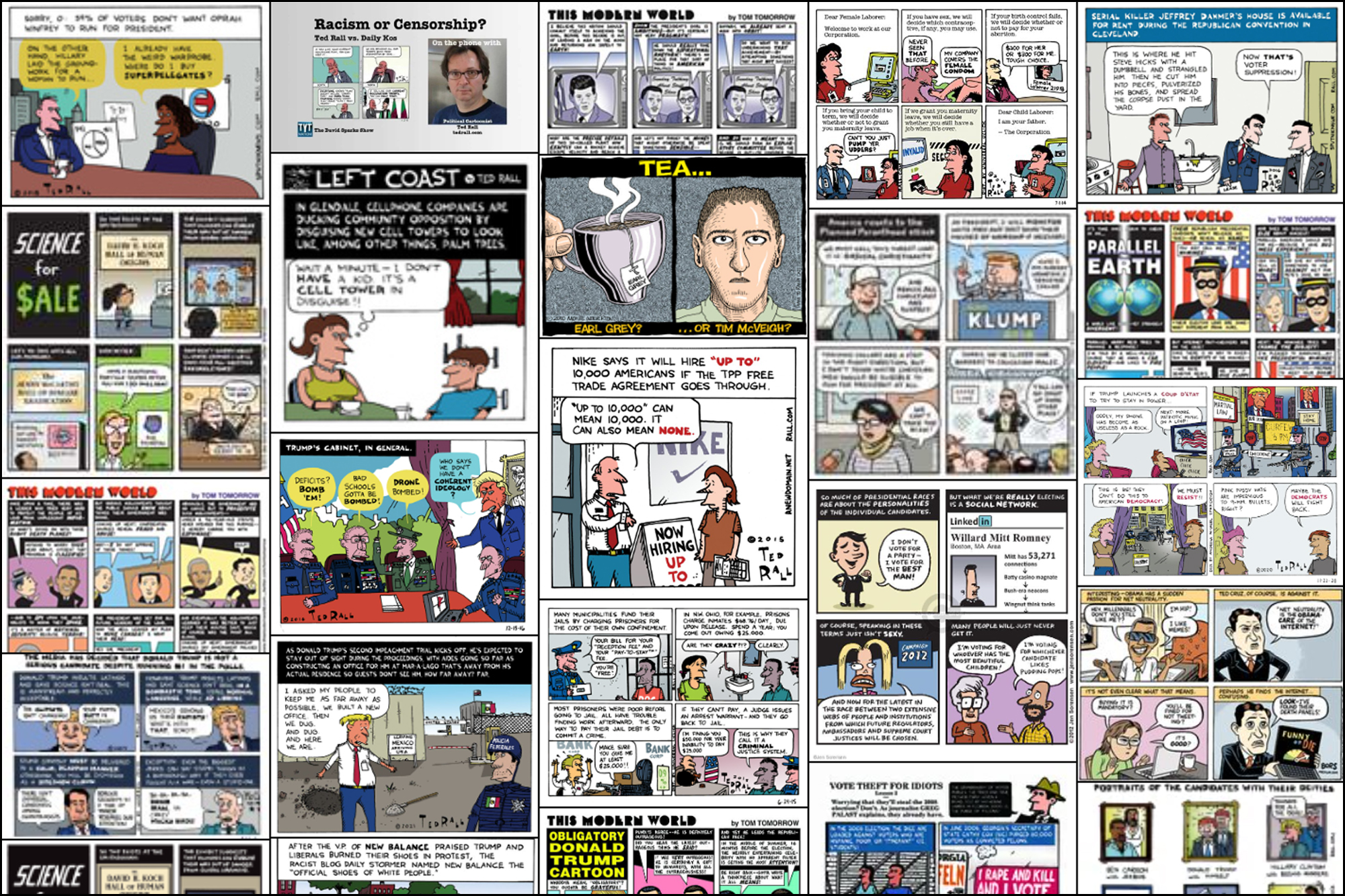

Andersen explains: “When I checked the website haveibeentrained.com, a site created to allow people to search LAION data sets, so much of my work was on there that it filled up my entire desktop screen.” I had the same experience. There they were, so many of my cartoons. Each one took hours to draw. Much as The Human League observed that “It took seconds of your time/To take his life” (about Lee Harvey Oswald), knowing that hundreds of my babies were scraped up in fractions of a second — automatically! no one even looked at my stuff as they stole it! — was highly disconcerting. And violating.

I suspect other professional artists, except for those who are militant libertarians, will soon suffer a similar awakening if they haven’t already. How do companies like Stability AI get away with this wholesale theft of intellectual property?

“Legally,” Andersen writes, “it appears as though LAION was able to scour what seems like the entire Internet because it deems itself a nonprofit organization engaging in academic research. While it was funded at least in part by Stability AI, the company that created Stable Diffusion, it is technically a separate entity. Stability AI then used its nonprofit research arm to create A.I. generators first via Stable Diffusion and then commercialized in a new model called DreamStudio.”

So yucky. So sociopathic. Mentioning the name of the company publicizes them, drives traffic to their website, makes the problem worse. What’s less damaging? Drawing attention to them in the hope that Congress or some other regulatory body notices them, or, less likely, they and their ilk feel shame? Or not talking about this at all on faith that AI will become the new CB radio? What kind of people think this is OK to do? Whether you process the Zyklon B pellets or congratulate yourself on equating technological advancement with social improvement, perhaps you should withdraw to a distant atoll.

This text-to-image business venture reminds me of the greatest intellectual property heist in human history: Google Books. In 2004, Google partnered with major research libraries to scan tens of millions of books in their collections. Its stated goal was to scan every book in print and out of print, in every format and every language, in order to create a searchable online database of these books.

Believing that because they were Google they didn’t have to follow the law, they ignored the exclusive copyrights (enshrined in the Constitution, no less) of millions of authors, me included, from whom they never requested permission or consent. Given how things turned out, they were correct.

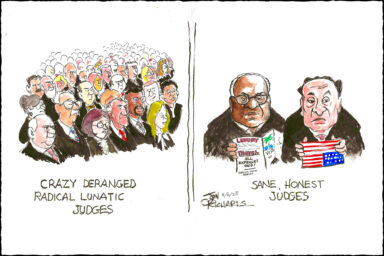

A 2013 federal court ruling, upheld on appeal in 2015 and left standing without comment by the US Supreme Court in 2016, tossed a lawsuit filed by the Authors Guild and absurdly declared Google’s actions to fall under the loophole of “fair use,” which typically applies to commentary on or parodies of copyrighted material. Mary Rasenberger of the Authors Guild responded to the Supreme Court’s decision: “Authors are already among the most poorly-paid workers in America; if tomorrow’s authors cannot make a living from their work, only the independently wealthy or the subsidized will be able to pursue a career in writing, and America’s intellectual and artistic soul will be impoverished.” In this case, the Supreme Court was parodying an understanding of copyright law.

Every now and then we are called at a critical time to stuff an evil genie back into its bottle before it’s too late. This it’s-just-fun-research chicanery indicates that this is one of those times. Unless someone powerful does something big and does it soon, there could soon be so little new original content being generated that AI would be reduced to cannibalizing a stagnant, static, dead data set. The human imagination will be supplanted by the banal, the self-referential and incestuous — unless AI truly starts to think for itself.

If we lived, as I would prefer, in a communitarian society in which all the fruits of labor and the bounty of the earth were shared as equally as possible, I would feel privileged to share everything I created with anyone who wanted to look at it without the slightest concern for remuneration. In a capitalist society, however, possession and the ability to secure one’s property and work product are essential in order to survive. Generosity and liberal dispensation of labor may be admirable, but those who overindulge them are in danger of starving to death. We lock our doors, take our car keys with us, don’t let fellow students cheat off our exam answers.

And artists, professional ones, don’t give their work away. I rent the use of my work as my landlord rents the cube in which I live; either of us may choose not to do so. Everyone has the right to control their creative output. We have to have the right, for American artists and other creative workers do not receive government subsidies, as is common in other countries. Under this lamentable capitalism, stealing the fruits of the human imagination is a crime against civilization.

My father, a lauded aeronautical engineer, thought and talked a lot about technology. One thing he used to say — that I’m not sure I agree with — is that once a technological advancement or development becomes possible, it’s inevitable. If Oppenheimer doesn’t build that bomb, some Nazi or Soviet scientist will. Maybe, maybe not. Al-Qaeda wanted to, badly, and could not. But society can decide not to develop or disseminate a technology after it’s been conceived. Mines are very easy to build, yet most of the world (which, as is the case far too often, doesn’t include the US) has come together to ban them. Ditto for biological and chemical weapons.

No one can reverse the advance of technological innovation. AI is here to stay. But we decide how to use it and regulate it. Artists need not be cast upon the pyre of progress.

Copyright and trademark protections have survived the printing press, photocopiers, Napster, and previous forms of cut-and-paste digital reproduction. Laws and regulations can and should form the basis of a body of case law that prohibits the exploitation of creative people for the benefit of the tech elite. Exceptions may be made for AI-based generators that are kept private at academic and other research facilities, not made available to the public.

My dad’s other main point, I think, is tougher to nitpick. Technology, he argued, is inherently neutral. Splitting the atom can be used to blow things up, but it can also power cities more cleanly than many other energy sources. Knives murder and also save lives. Cars fueled by internal combustion engines maim pedestrians; ambulances powered by the same means spirit fallen victims to the hospital. Drones kill people; drones find those who are lost in the wilderness. Artificial intelligence, I am certain, is neutral as well. We merely need to control it.

But nothing will happen unless we fight together.

First Amendment and free speech organizations, artists, journalists, and other creative people must come together, lobby Congress for regulatory controls, and file lawsuits against companies that infringe upon copyright in order to wage an existential battle against the SkyNet of the art world. Unless Congress acts quickly and decisively, creative people in every field you can think of will be unable to distinguish their work from computer-generated knockoffs, radically curtailing their ability to command payment for their labor — and to lift the human spirit.

Ted Rall draws, writes, and thinks for money.